#include <adam.h>

Inheritance diagram for bc::nn::Adam::Optimizer< Tensor >:

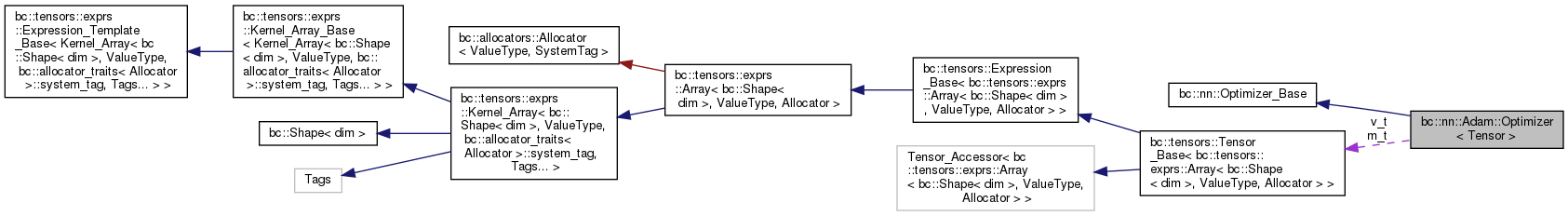

| Public Types | |
|---|---|
| using | value_type = typename Tensor::value_type |
| using | system_tag = typename Tensor::system_tag |

| Public Member Functions | |
|---|---|
| template<class... Args> | Optimizer (Args &&... args) |
| template<class TensorX , class Gradients > void | update (TensorX &tensor, Gradients &&delta) |
| void | set_learning_rate (value_type lr) |
| void | save (Layer_Loader &loader, std::string name) const |
| void | load (Layer_Loader &loader, std::string name) |

| Public Member Functions inherited from bc::nn::Optimizer_Base | |
|---|---|
| void | save (Layer_Loader &loader, std::string name) const |
| void | load (Layer_Loader &loader, std::string name) |

| Public Attributes | |
|---|---|
| value_type | alpha = bc::nn::default_learning_rate |
| value_type | beta_1 = 0.9 |
| value_type | beta_2 = 0.999 |
| value_type | epsilon = 1e-8 |
| value_type | time_stamp = 0 |
| Tensor | m_t |
| Tensor | v_t |
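The attribute names and defaults above match the hyperparameters and state of the standard Adam algorithm. This page does not show the body of `update`, so the following is only a minimal sketch of one standard Adam step, assuming that is what the class implements. `AdamSketch` is a hypothetical stand-in that uses `std::vector<double>` in place of `Tensor`; the learning rate value `0.001` and the sign convention (the step is subtracted from the parameter) are assumptions, not taken from the library.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for bc::nn::Adam::Optimizer<Tensor>; not the library's code.
struct AdamSketch {
    double alpha      = 0.001;  // assumed; the library uses bc::nn::default_learning_rate
    double beta_1     = 0.9;    // decay rate of the first-moment estimate
    double beta_2     = 0.999;  // decay rate of the second-moment estimate
    double epsilon    = 1e-8;   // guards against division by zero
    double time_stamp = 0;      // number of update steps taken so far
    std::vector<double> m_t;    // running mean of the gradients
    std::vector<double> v_t;    // running mean of the squared gradients

    explicit AdamSketch(std::size_t n) : m_t(n, 0.0), v_t(n, 0.0) {}

    // Applies one standard Adam step in place (assumed convention: param -= step).
    void update(std::vector<double>& param, const std::vector<double>& grad) {
        assert(param.size() == grad.size() && param.size() == m_t.size());
        time_stamp += 1;
        // Bias-correction denominators for the zero-initialized moments.
        const double c1 = 1.0 - std::pow(beta_1, time_stamp);
        const double c2 = 1.0 - std::pow(beta_2, time_stamp);
        for (std::size_t i = 0; i < param.size(); ++i) {
            m_t[i] = beta_1 * m_t[i] + (1.0 - beta_1) * grad[i];
            v_t[i] = beta_2 * v_t[i] + (1.0 - beta_2) * grad[i] * grad[i];
            const double m_hat = m_t[i] / c1;
            const double v_hat = v_t[i] / c2;
            param[i] -= alpha * m_hat / (std::sqrt(v_hat) + epsilon);
        }
    }
};
```

In this sketch the first step moves each parameter by roughly `alpha` in the direction opposite to its gradient, regardless of the gradient's magnitude, because the bias-corrected ratio `m_hat / sqrt(v_hat)` is close to ±1 at that point.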

Member Typedef Documentation

◆ system_tag

template<class Tensor >

| using bc::nn::Adam::Optimizer< Tensor >::system_tag = typename Tensor::system_tag |

◆ value_type

template<class Tensor >

| using bc::nn::Adam::Optimizer< Tensor >::value_type = typename Tensor::value_type |

Constructor & Destructor Documentation

◆ Optimizer()

template<class Tensor >

template<class... Args>

| bc::nn::Adam::Optimizer< Tensor >::Optimizer (Args &&... args) | inline |

Member Function Documentation

◆ load()

template<class Tensor >

| void bc::nn::Adam::Optimizer< Tensor >::load (Layer_Loader &loader, std::string name) | inline |

◆ save()

template<class Tensor >

| void bc::nn::Adam::Optimizer< Tensor >::save (Layer_Loader &loader, std::string name) const | inline |

◆ set_learning_rate()

template<class Tensor >

| void bc::nn::Adam::Optimizer< Tensor >::set_learning_rate (value_type lr) | inline |

◆ update()

template<class Tensor >

template<class TensorX , class Gradients >

| void bc::nn::Adam::Optimizer< Tensor >::update (TensorX &tensor, Gradients &&delta) | inline |

Member Data Documentation

◆ alpha

template<class Tensor >

| value_type bc::nn::Adam::Optimizer< Tensor >::alpha = bc::nn::default_learning_rate |

◆ beta_1

template<class Tensor >

| value_type bc::nn::Adam::Optimizer< Tensor >::beta_1 = 0.9 |

◆ beta_2

template<class Tensor >

| value_type bc::nn::Adam::Optimizer< Tensor >::beta_2 = 0.999 |

◆ epsilon

template<class Tensor >

| value_type bc::nn::Adam::Optimizer< Tensor >::epsilon = 1e-8 |

◆ m_t

template<class Tensor >

| Tensor bc::nn::Adam::Optimizer< Tensor >::m_t |

◆ time_stamp

template<class Tensor >

| value_type bc::nn::Adam::Optimizer< Tensor >::time_stamp = 0 |
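This page does not describe what `time_stamp` is used for. If the class follows the standard Adam formulation (an assumption), `time_stamp` would play the role of the step counter $t$ in the bias correction of the zero-initialized moments `m_t` and `v_t`. The symbols $g_t$, $\hat{m}_t$ and $\hat{v}_t$ below are notation introduced for illustration and do not appear in the source:

```latex
\hat{m}_t = \frac{m_t}{1 - \beta_1^{\,t}}, \qquad
\hat{v}_t = \frac{v_t}{1 - \beta_2^{\,t}}

% Worked case for the first step, with m_0 = 0 and \beta_1 = 0.9:
m_1 = \beta_1 m_0 + (1 - \beta_1)\, g_1 = 0.1\, g_1, \qquad
\hat{m}_1 = \frac{0.1\, g_1}{1 - 0.9^{1}} = g_1
```

Without the correction the first-moment estimate would start at one tenth of the gradient. As $t$ grows, $\beta_1^{\,t}$ and $\beta_2^{\,t}$ tend to zero and the correction factors tend to 1.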

◆ v_t

template<class Tensor >

| Tensor bc::nn::Adam::Optimizer< Tensor >::v_t |

The documentation for this struct was generated from the following file:

- blackcat/neural_networks/optimzers/adam.h

1.8.13
