the Neural_Network More...

#include <network.h>

Public Types | |

| using | self = NeuralNetwork< Layers... > |

| using | is_recurrent = bc::traits::any< detail::is_recurrent_layer, Layers... > |

| using | layer_chain = LayerChain< bc::traits::Integer< 0 >, void, Layers... > |

Public Member Functions | |

| NeuralNetwork (Layers... layers) | |

| Basic Constructor for Neural Networks. More... | |

| template<class T > | |

| auto | forward_propagation (const T &tensor) |

| Calls forward propagation on each of the neural network's layers. The time index is set to zero prior to each forward call. More... | |

| template<class T > | |

| auto | back_propagation (const T &tensor) |

| Calls back-propagation on each of the neural network's layers. The time index is incremented after each call to back_propagation. More... | |

| template<class T > | |

| auto | predict (const T &tensor) |

| Returns the output of a single batch. More... | |

| template<class T > | |

| auto | single_predict (const T &tensor) |

| Returns the output of a single input. More... | |

| template<int X> | |

| auto & | get_layer () const |

| Returns a const reference to the layer specified by the given index. More... | |

| template<int X> | |

| auto & | get_layer () |

| Returns a reference to the layer specified by the given index. More... | |

| void | set_learning_rate (double lr) |

| Sets the learning rate for each layer in the Neural_Network. More... | |

| double | get_learning_rate () const |

| Returns the current global learning rate. More... | |

| void | set_batch_size (int batch_sz) |

| Sets the batch_size of the entire Neural_Network. The intermediate values are discarded when setting the batch_size. More... | |

| void | copy_training_data_to_single_predict (int batch_index) |

| Copies the given batch_index from the training cell_state to the inference cell_state. More... | |

| void | zero_time_index () |

| Sets the expression_template time_index to zero. More... | |

| void | update_weights () |

| Updates the weights of each Layer based upon the current stored gradients. More... | |

| bc::size_t | input_size () const |

| Returns the input_size of the first layer. More... | |

| bc::size_t | output_size () const |

| Returns the output_size of the last layer. More... | |

| bc::size_t | batch_size () const |

| Returns the batch_size of the neural network. More... | |

| std::string | get_string_architecture () const |

| Returns a YAML representation of the neural network. More... | |

| void | save (std::string directory_name) |

| Creates the directory directory_name using mkdir. More... | |

| void | load (std::string directory_name) |

| Loads a neural network from a previously saved instance. More... | |

Public Attributes | |

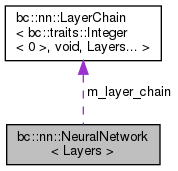

| layer_chain | m_layer_chain |

| double | m_learning_rate = m_layer_chain.head().layer().get_learning_rate() |

| bc::size_t | m_batch_size = 1 |

Detailed Description

template<class ... Layers>

struct bc::nn::NeuralNetwork< Layers >

the Neural_Network

Member Typedef Documentation

◆ is_recurrent

| using bc::nn::NeuralNetwork< Layers >::is_recurrent = bc::traits::any<detail::is_recurrent_layer, Layers...> |
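
As a rough illustration of what a trait such as `is_recurrent` computes, the sketch below uses `std::disjunction` from the standard library in place of `bc::traits::any`. The layer types `Dense` and `Lstm`, the `recurrent_tag` member, and this version of `is_recurrent_layer` are hypothetical stand-ins chosen for the example; they are not BlackCat's definitions.

```cpp
#include <type_traits>

// Hypothetical layer types used only for this illustration.
struct Dense {};
struct Lstm { using recurrent_tag = void; };

// Hypothetical predicate: true when a layer declares `recurrent_tag`.
template<class Layer, class = void>
struct is_recurrent_layer : std::false_type {};

template<class Layer>
struct is_recurrent_layer<Layer, std::void_t<typename Layer::recurrent_tag>>
    : std::true_type {};

// Stand-in for an "any" trait: true if the predicate holds for at least one layer.
template<class... Layers>
using any_recurrent = std::disjunction<is_recurrent_layer<Layers>...>;

static_assert(!any_recurrent<Dense, Dense>::value, "no recurrent layer present");
static_assert(any_recurrent<Dense, Lstm>::value, "one recurrent layer present");
```

Because the result is a compile-time constant, a network could use it to select recurrent-only code paths without any runtime cost.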

◆ layer_chain

| using bc::nn::NeuralNetwork< Layers >::layer_chain = LayerChain< bc::traits::Integer<0>, void, Layers...> |

◆ self

| using bc::nn::NeuralNetwork< Layers >::self = NeuralNetwork<Layers...> |

Constructor & Destructor Documentation

◆ NeuralNetwork()

| bc::nn::NeuralNetwork< Layers >::NeuralNetwork (Layers... layers) | inline |

Basic Constructor for Neural Networks.

Accepts a variadic parameter pack of Layer-like objects.

Member Function Documentation

◆ back_propagation()

| template<class T > auto bc::nn::NeuralNetwork< Layers >::back_propagation (const T &tensor) | inline |

Calls back-propagation on each of the neural network's layers. The time index is incremented after each call to back_propagation.

Returns: the error of the inputs at the current time_index.

◆ batch_size()

| bc::size_t bc::nn::NeuralNetwork< Layers >::batch_size () const | inline |

Returns the batch_size of the neural network.

◆ copy_training_data_to_single_predict()

| void bc::nn::NeuralNetwork< Layers >::copy_training_data_to_single_predict (int batch_index) | inline |

Copies the given batch_index from the training cell_state to the inference cell_state.

The time_index is set to zero after copying.

◆ forward_propagation()

| template<class T > auto bc::nn::NeuralNetwork< Layers >::forward_propagation (const T &tensor) | inline |

Calls forward propagation on each of the neural network's layers. The time index is set to zero prior to each forward call.

Arguments: a Tensor type with the same shape as the value returned by the first layer's 'get_batched_input_shape()'.

Returns: if the neural network terminates with an Output_Layer, forward propagation returns a 'shallow-copy' of the output.

A shallow-copy behaves like a Tensor class but acts as a handle to the underlying Tensor, so passing it by value does not copy the data.
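
The handle semantics described above can be pictured with a small non-owning view. This is only a sketch: `ToyView` and `scale_in_place` are hypothetical names, and BlackCat's actual shallow-copy type is not shown here.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical non-owning handle: copying it copies a pointer, not the data.
struct ToyView {
    double* data;
    std::size_t size;

    double& operator[](std::size_t i) const {
        assert(i < size);
        return data[i];
    }
};

// Takes the handle by value; writes still reach the underlying storage.
inline void scale_in_place(ToyView view, double factor) {
    for (std::size_t i = 0; i < view.size; ++i) {
        view[i] *= factor;
    }
}

// Example usage: the vector owns the data, the view only refers to it.
inline void demo_view() {
    std::vector<double> storage{1.0, 2.0, 3.0};
    ToyView view{storage.data(), storage.size()};
    scale_in_place(view, 2.0);
    assert(storage[2] == 6.0);
}
```

The usual caveat for any handle applies: the view must not outlive the storage it points to.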

◆ get_layer() [1/2]

| template<int X> auto& bc::nn::NeuralNetwork< Layers >::get_layer () const | inline |

Returns a const reference to the layer specified by the given index.

◆ get_layer() [2/2]

| template<int X> auto& bc::nn::NeuralNetwork< Layers >::get_layer () | inline |

Returns a reference to the layer specified by the given index.
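
To make compile-time indexed access concrete, here is a minimal sketch that stores a variadic pack of layers in a `std::tuple` and exposes both overloads of `get_layer<X>()`. The source stores layers in a `LayerChain`, so the tuple is a substitution made for brevity, and `ToyNetwork`, `make_toy_network`, `DenseLayer`, and `TanhLayer` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <tuple>
#include <utility>

// Hypothetical layers; each one only carries a size for the demonstration.
struct DenseLayer { std::size_t outputs; };
struct TanhLayer  { std::size_t outputs; };

// Toy network that stores its layers in a std::tuple (not a LayerChain).
template<class... Layers>
struct ToyNetwork {
    std::tuple<Layers...> layers;

    // Accepts a variadic pack of layer-like objects.
    explicit ToyNetwork(Layers... ls) : layers(std::move(ls)...) {}

    // Mutable access to the layer at compile-time index X.
    template<int X>
    auto& get_layer() { return std::get<X>(layers); }

    // Read-only access to the layer at compile-time index X.
    template<int X>
    const auto& get_layer() const { return std::get<X>(layers); }
};

// Helper so the layer types are deduced from the arguments.
template<class... Layers>
ToyNetwork<Layers...> make_toy_network(Layers... ls) {
    return ToyNetwork<Layers...>(std::move(ls)...);
}

// Example usage.
inline void demo_get_layer() {
    auto net = make_toy_network(DenseLayer{256}, TanhLayer{256}, DenseLayer{10});
    net.get_layer<2>().outputs = 5;          // non-const overload
    const auto& read_only = net;
    assert(read_only.get_layer<2>().outputs == 5);  // const overload
}
```

An out-of-range index fails at compile time, since `std::get` rejects it.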

◆ get_learning_rate()

| double bc::nn::NeuralNetwork< Layers >::get_learning_rate () const | inline |

Returns the current global learning rate.

◆ get_string_architecture()

| std::string bc::nn::NeuralNetwork< Layers >::get_string_architecture () const | inline |

Returns a YAML representation of the neural network.

◆ input_size()

| bc::size_t bc::nn::NeuralNetwork< Layers >::input_size () const | inline |

Returns the input_size of the first layer.

◆ load()

| void bc::nn::NeuralNetwork< Layers >::load (std::string directory_name) | inline |

Loads a neural network from a previously saved instance.

Load expects the neural-network to have been unused in the previous state.

◆ output_size()

| bc::size_t bc::nn::NeuralNetwork< Layers >::output_size () const | inline |

Returns the output_size of the last layer.

◆ predict()

| template<class T > auto bc::nn::NeuralNetwork< Layers >::predict (const T &tensor) | inline |

Returns the output of a single batch.

Predict is identical to forward propagation except that it does not cache the intermediate values required for back_propagation. The inputs and outputs of each layer are still cached, however, as most recurrent neural networks require them.

◆ save()

| void bc::nn::NeuralNetwork< Layers >::save (std::string directory_name) | inline |

Creates the directory directory_name using mkdir.

It then writes an architecture.yaml file with a description of the neural network, iterates through each layer, and calls 'save(int layer_index, std::string directory_name)' on each one.
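
The save sequence can be sketched as follows. This is an illustration under stated assumptions, not the library's code: it swaps in `std::filesystem::create_directories` (C++17) for the `mkdir` call mentioned above, and `SavedLayer`, `save_network`, the per-layer file names, and the YAML contents are all hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <filesystem>
#include <fstream>
#include <string>
#include <vector>

// Hypothetical layer that writes a single weight to its own file.
struct SavedLayer {
    std::string name;
    double weight;

    void save(int layer_index, const std::string& directory_name) const {
        const std::filesystem::path file = std::filesystem::path(directory_name)
            / ("l" + std::to_string(layer_index) + "_" + name + ".txt");
        std::ofstream out(file);
        out << weight << '\n';
    }
};

// Follows the described order: directory, architecture.yaml, then each layer.
inline void save_network(const std::vector<SavedLayer>& layers,
                         const std::string& directory_name) {
    assert(!directory_name.empty());
    std::filesystem::create_directories(directory_name);

    // Made-up YAML layout that simply lists the layer names.
    std::ofstream yaml(std::filesystem::path(directory_name) / "architecture.yaml");
    yaml << "layers:\n";
    for (const SavedLayer& layer : layers) {
        yaml << "  - " << layer.name << '\n';
    }

    // Each layer persists its own state, tagged with its index.
    for (std::size_t i = 0; i < layers.size(); ++i) {
        layers[i].save(static_cast<int>(i), directory_name);
    }
}
```

Passing the layer index to each layer keeps file names unique even when the same layer type appears more than once.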

◆ set_batch_size()

| void bc::nn::NeuralNetwork< Layers >::set_batch_size (int batch_sz) | inline |

Sets the batch_size of the entire Neural_Network. The intermediate values are discarded when setting the batch_size.

◆ set_learning_rate()

| void bc::nn::NeuralNetwork< Layers >::set_learning_rate (double lr) | inline |

Sets the learning rate for each layer in the Neural_Network.

An individual layer's learning rate can be set by accessing the layer via 'get_layer<int index>()' and calling 'set_learning_rate' on it.
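
The relationship between the global rate and a per-layer override can be sketched like this. `RateLayer` and `RateNetwork` are hypothetical stand-ins that only mimic the method names from this page; the tuple storage and the C++17 fold expression are choices made for the example.

```cpp
#include <cassert>
#include <tuple>

// Hypothetical layer that only stores a learning rate.
struct RateLayer {
    double lr = 0.01;
    void set_learning_rate(double value) { lr = value; }
    double get_learning_rate() const { return lr; }
};

// Toy network: a global rate plus one rate per layer.
template<class... Layers>
struct RateNetwork {
    std::tuple<Layers...> layers;
    double learning_rate = 0.01;

    // Records the global rate and forwards it to every layer.
    void set_learning_rate(double lr) {
        learning_rate = lr;
        std::apply([lr](auto&... layer) { (layer.set_learning_rate(lr), ...); },
                   layers);
    }

    double get_learning_rate() const { return learning_rate; }

    template<int X>
    auto& get_layer() { return std::get<X>(layers); }
};

// Example usage: set a global rate, then override a single layer.
inline void demo_learning_rates() {
    RateNetwork<RateLayer, RateLayer> net;
    net.set_learning_rate(0.003);
    net.get_layer<1>().set_learning_rate(0.0005);
    assert(net.get_layer<0>().get_learning_rate() == 0.003);
    assert(net.get_layer<1>().get_learning_rate() == 0.0005);
    assert(net.get_learning_rate() == 0.003);  // global value is unchanged
}
```

In this sketch a later call to the network-level setter overwrites any per-layer override, so overrides would be applied last.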

◆ single_predict()

| template<class T > auto bc::nn::NeuralNetwork< Layers >::single_predict (const T &tensor) | inline |

Returns the output of a single input.

single_predict is identical to predict except it accepts a non-batched input to forward-propagate on.

◆ update_weights()

| void bc::nn::NeuralNetwork< Layers >::update_weights () | inline |

Updates the weights of each Layer based upon the current stored gradients.
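
The separation between storing gradients and applying them can be seen in a one-weight toy model. Everything here is an assumption made for illustration: `ToyModel`, its squared-error loss, and the choice to pass the expected output to `back_propagation` are not taken from the library.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical one-weight model y = w * x, used only to show the call order.
struct ToyModel {
    double w = 0.0;              // trainable weight
    double learning_rate = 0.1;
    double cached_x = 0.0;       // input cached by forward_propagation
    double cached_y = 0.0;       // output cached by forward_propagation
    double stored_grad = 0.0;    // gradient stored by back_propagation

    double forward_propagation(double x) {
        cached_x = x;
        cached_y = w * x;
        return cached_y;
    }

    // Accumulates dL/dw for L = 0.5 * (y - expected)^2; the weight is untouched.
    void back_propagation(double expected) {
        stored_grad += (cached_y - expected) * cached_x;
    }

    // Applies the stored gradient, then clears it.
    void update_weights() {
        w -= learning_rate * stored_grad;
        stored_grad = 0.0;
    }
};

// Trains toward y = 2x on the single sample (1, 2) and returns the weight.
inline double train_toy_model(int steps) {
    assert(steps >= 0);
    ToyModel model;
    for (int i = 0; i < steps; ++i) {
        model.forward_propagation(1.0);
        model.back_propagation(2.0);
        model.update_weights();
    }
    return model.w;
}
```

Calling `back_propagation` several times before a single `update_weights` would accumulate gradients across those calls in this sketch.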

◆ zero_time_index()

| void bc::nn::NeuralNetwork< Layers >::zero_time_index () | inline |

Sets the expression_template time_index to zero.

The time_index dictates which intermediates are returned during back_propagation and forward_propagation of a recurrent neural network.

This value is not relevant in non-recurrent neural networks.
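
One way to picture the time index is a cache of per-timestep intermediates that is read newest-first during back-propagation. The sketch below assumes that index 0 refers to the most recent forward pass. `ToyRecurrentCache` is a hypothetical type, and the library's real cache layout is not documented on this page.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>

// Hypothetical cache of per-timestep intermediates for a recurrent layer.
// Assumption: index 0 holds the most recent forward pass.
struct ToyRecurrentCache {
    std::deque<double> history;
    std::size_t time_index = 0;

    void zero_time_index() { time_index = 0; }

    // Forward pass: reset the index, then store the newest intermediate.
    void forward_propagation(double value) {
        zero_time_index();
        history.push_front(value);
    }

    // Backward pass: read the intermediate at time_index, then step back in time.
    double back_propagation() {
        assert(time_index < history.size());
        const double value = history[time_index];
        ++time_index;
        return value;
    }
};

// Example usage: three forward steps, then back-propagation through time.
inline void demo_time_index() {
    ToyRecurrentCache cache;
    cache.forward_propagation(1.0);  // t = 0
    cache.forward_propagation(2.0);  // t = 1
    cache.forward_propagation(3.0);  // t = 2
    assert(cache.back_propagation() == 3.0);  // newest intermediate first
    assert(cache.back_propagation() == 2.0);
    assert(cache.back_propagation() == 1.0);
    cache.zero_time_index();                   // rewind to the newest step
    assert(cache.back_propagation() == 3.0);
}
```

For a non-recurrent network only one intermediate per layer is needed, which matches the note above that the index is irrelevant there.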

Member Data Documentation

◆ m_batch_size

| bc::size_t bc::nn::NeuralNetwork< Layers >::m_batch_size = 1 |

◆ m_layer_chain

| layer_chain bc::nn::NeuralNetwork< Layers >::m_layer_chain |

◆ m_learning_rate

| double bc::nn::NeuralNetwork< Layers >::m_learning_rate = m_layer_chain.head().layer().get_learning_rate() |

The documentation for this struct was generated from the following file:

- blackcat/neural_networks/network.h

1.8.13
